MIT Developed a Way For Cars To See Through Fog When Human Drivers Can’t

http://ift.tt/2GUlN7u


Bad weather can make driving extremely dangerous, no matter who’s behind the wheel. Dense fog is especially bad, making it all but impossible for human drivers to see more than a few feet ahead of a vehicle. Now MIT researchers say they have developed a new imaging system that could eventually let autonomous cars see right through it.

Most autonomous navigation systems use cameras and sensors that rely on images and video feeds generated by visible light. Humans work the same way, which is why fog and mist have been equally problematic for cars with or without a driver in the front seat.

To solve this problem, MIT researchers Guy Satat, Ramesh Raskar, and Matthew Tancik created a new laser-based imaging system that can accurately calculate the distance to objects, even through thick fog. The system, which will be presented in a paper at the International Conference on Computational Photography in Pittsburgh this May, fires short bursts of laser light away from a camera and times how long each one takes to bounce back.

When the weather’s nice and the laser light has a clear path, this time-of-flight approach is a very accurate way to measure the distance to an object. But fog, made up of countless tiny water droplets hanging in the air, scatters the light in all directions. The disrupted laser bursts arrive back at the camera at different times, throwing off distance calculations that depend on precise timing.
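The time-of-flight principle comes down to one relation: a pulse travels to the target and back, so the distance is d = c·t/2, where c is the speed of light and t is the round-trip time. The short Python sketch below (the function and variable names are illustrative, not taken from the MIT system) shows that calculation and how sensitive it is to timing: a photon that arrives just 1 nanosecond late shifts the estimate by roughly 15 centimeters.

```python
C_M_PER_S = 299_792_458.0  # speed of light in a vacuum, metres per second

def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of a light pulse.
    The pulse travels out and back, so the one-way distance is half the path."""
    return C_M_PER_S * round_trip_s / 2

# Clear air: a target 1 m away returns the pulse after roughly 6.67 ns.
clear_s = 2 * 1.0 / C_M_PER_S
print(f"clear air: {tof_distance_m(clear_s):.3f} m")

# Fog: a photon that scatters off droplets and arrives just 1 ns late
# makes the same target appear about 15 cm farther away.
late_s = clear_s + 1e-9
print(f"1 ns late: {tof_distance_m(late_s):.3f} m")
```

Because light covers about 30 centimeters per nanosecond, even small timing disturbances from scattering translate into large distance errors at the scale of a lab chamber.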

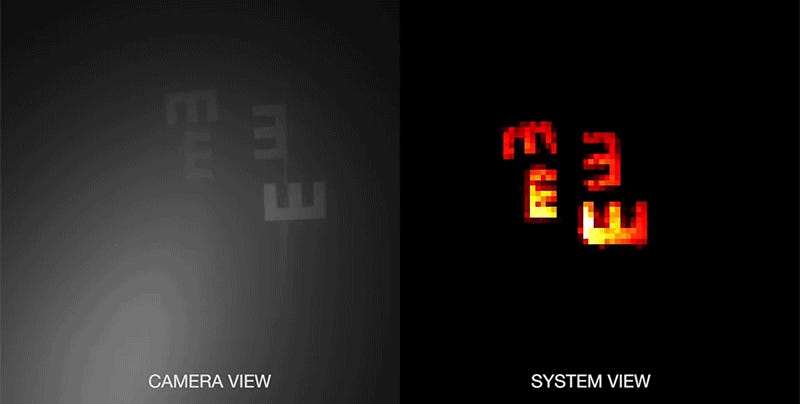

To get around the scattering, the MIT researchers say they developed a new processing algorithm. They found that no matter how thick the fog, the arrival times of the scattered laser light always follow a specific statistical distribution. The camera counts the photons reaching its sensor in time slices lasting only trillionths of a second, and when those counts are graphed, the system applies mathematical filters that remove the fog’s predictable contribution, leaving spikes in the data that reveal actual objects hidden in the fog.
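The filtering idea can be illustrated with a toy simulation. This is a hypothetical sketch, not the MIT team’s algorithm: it assumes the fog’s photon arrival times follow a gamma distribution (the statistical pattern the researchers report for fog), fits that distribution to all arrivals with the method of moments, subtracts the expected fog counts from each histogram bin, and treats the largest remaining spike as the object. Every count, bin width, and distribution parameter below is made up for illustration.

```python
import math
import random

BIN_NS = 0.1              # hypothetical histogram bin width, in nanoseconds
N_BINS = 200              # covers 0-20 ns of round-trip time
C_M_PER_NS = 0.299792458  # speed of light in metres per nanosecond

def simulate_arrivals(rng, n_fog=100_000, n_obj=2_000, obj_ns=6.05):
    """Hypothetical photon arrival times: gamma-shaped fog backscatter plus a
    narrow return from a single object at obj_ns."""
    fog = [rng.gammavariate(2.0, 1.0) for _ in range(n_fog)]  # shape 2, scale 1 ns
    obj = [rng.gauss(obj_ns, 0.05) for _ in range(n_obj)]
    return fog + obj

def histogram(times):
    """Count photons per time bin, dropping arrivals outside the window."""
    counts = [0] * N_BINS
    for t in times:
        i = int(t / BIN_NS)
        if 0 <= i < N_BINS:
            counts[i] += 1
    return counts

def gamma_pdf(x, k, theta):
    # Gamma density with shape k and scale theta, computed in log space.
    return math.exp((k - 1) * math.log(x) - x / theta
                    - math.lgamma(k) - k * math.log(theta))

def find_object(times):
    counts = histogram(times)
    n = len(times)
    # Method-of-moments gamma fit to all arrivals, assuming fog photons
    # vastly outnumber the photons returned by the object.
    mean = sum(times) / n
    var = sum((t - mean) ** 2 for t in times) / n
    k, theta = mean * mean / var, var / mean
    # Subtract the expected fog counts from each bin; what remains is signal.
    residual = [
        c - n * BIN_NS * gamma_pdf((i + 0.5) * BIN_NS, k, theta)
        for i, c in enumerate(counts)
    ]
    peak = max(range(N_BINS), key=residual.__getitem__)
    return (peak + 0.5) * BIN_NS, counts

rng = random.Random(0)
times = simulate_arrivals(rng)
t_ns, counts = find_object(times)
raw_peak_ns = (max(range(N_BINS), key=counts.__getitem__) + 0.5) * BIN_NS
print(f"raw histogram peak: {raw_peak_ns:.2f} ns (fog)")
print(f"after fog removal: {t_ns:.2f} ns -> {C_M_PER_NS * t_ns / 2:.2f} m")
```

In this simulation the raw histogram peaks near 1 ns, where fog backscatter dominates, while the fog-subtracted residual peaks at the object’s bin. The method-of-moments fit is a simplification chosen only because it needs nothing beyond Python’s standard library; real sensor data is far noisier than this toy example.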

In an MIT laboratory, the imaging system was tested in a small chamber about a meter long, where it clearly detected objects 21 centimeters farther away than human eyes could discern. When scaled up to real-world dimensions and conditions, where fog never gets as thick as what the researchers created artificially, the system would be able to see objects far enough ahead for a vehicle to have plenty of time to react safely and avoid them.

[MIT]


via Gizmodo http://gizmodo.com

March 21, 2018 at 05:54PM