https://arstechnica.com/?p=1485223

Left to right: Cascade Lake Xeon AP, Cascade Lake Xeon SP, Broadwell Xeon D-1600, and up front Optane DC Persistent Memory.

Intel today launched a barrage of new products for the data center, tackling almost every enterprise workload out there. The company’s diverse range of products highlights how today’s data center is more than just processors, with network controllers, customizable FPGAs, and edge device processors all part of the offering.

The stars of the show are the new Cascade Lake Xeons. These were first announced last November, and at the time a dual-die chip with 48 cores, 96 threads, and 12 DDR4-2933 memory channels was going to be the top-spec part. But Intel has gone even further than initially planned with the new Xeon Platinum 9200 range: the top-spec part, the Platinum 9282, pairs two 28-core dies for a total of 56 cores and 112 threads. It has a base frequency of 2.6GHz, a 3.8GHz turbo, 77MB of level 3 cache, 40 lanes of PCIe 3.0 expansion, and a 400W power draw.

The new dual-die chips are dubbed “Advanced Performance” (AP) and slot in above the Xeon SP (“Scalable Processor”) range. They’ll be supported in two-socket configurations for a total of four dies, 24 memory channels, and 112 cores/224 threads. Intel does not plan to sell these as bare chips; instead, the company is going to sell motherboard-plus-processor packages to OEMs. The OEMs are then responsible for adding liquid or air cooling, deciding how densely they want to pack the motherboards, and so on. As such, there’s no price for these chips, though we imagine it’ll be somewhere north of “expensive.”

| Model | Cores/Threads | Base/turbo clock (GHz) | Level 3 cache (MB) | TDP (W) | Price |
|---|---|---|---|---|---|
| Platinum 9282 | 56/112 | 2.6/3.8 | 77.0 | 400 | $many |
| Platinum 9242 | 48/96 | 2.3/3.8 | 71.5 | 350 | $many |
| Platinum 9222 | 32/64 | 2.3/3.7 | 71.5 | 250 | $many |
| Platinum 9221 | 32/64 | 2.1/3.7 | 71.5 | 250 | $many |
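The two-socket totals quoted above follow directly from the per-package figures. The sketch below reproduces that arithmetic; the class and function names are made up for illustration, and the peak-bandwidth estimate assumes the usual 8 bytes per transfer on a DDR4 channel, which the article does not state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class XeonAP:
    """Per-package figures for a dual-die AP part (illustrative model only)."""
    name: str
    cores: int
    dies: int = 2               # AP parts pair two dies in one package
    channels_per_die: int = 6   # six DDR4 channels per Cascade Lake die
    threads_per_core: int = 2   # two threads per core, as in 56 cores/112 threads


def platform_totals(cpu: XeonAP, sockets: int = 2) -> dict:
    """Scale the per-package figures up to a multi-socket system."""
    return {
        "dies": cpu.dies * sockets,
        "memory_channels": cpu.dies * cpu.channels_per_die * sockets,
        "cores": cpu.cores * sockets,
        "threads": cpu.cores * cpu.threads_per_core * sockets,
    }


def peak_bandwidth_gb_s(channels: int, mega_transfers: int = 2933,
                        bytes_per_transfer: int = 8) -> float:
    """Theoretical peak in GB/s. ASSUMPTION: 8 bytes per DDR4 transfer."""
    return channels * mega_transfers * bytes_per_transfer / 1000


totals = platform_totals(XeonAP("Platinum 9282", cores=56))
print(totals)
print(peak_bandwidth_gb_s(totals["memory_channels"]), "GB/s theoretical peak")
```

The bandwidth figure is an upper bound from the transfer rate alone; sustained throughput on real systems will be lower.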

As well as these new AP parts, Intel is offering a full refresh of the Xeon SP line. The full Cascade Lake SP range includes some 60 different variations, offering different combinations of core count, frequency, level 3 cache, power dissipation, and socket count. At the top end are the Xeon Platinum 8280, 8280M, and 8280L. All three of these have the same basic parameters: 28 cores/56 threads, 2.7/4.0GHz base/turbo, 38.5MB L3, and 205W power. They differ in the amount of memory they support: the bare 8280 supports 1.5TB, the M bumps that up to 2TB, and the L goes up to 4.5TB. The base model comes in at $10,009, with the high-memory variants costing more still.
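Because the three 8280 variants differ only in supported memory, picking one comes down to a capacity lookup. A minimal sketch, using only the limits quoted above (the function name is hypothetical):

```python
from typing import Optional

# Memory limits in TB, as quoted in the article for the three 8280 variants.
MEMORY_LIMIT_TB = {"8280": 1.5, "8280M": 2.0, "8280L": 4.5}


def smallest_sufficient_model(required_tb: float) -> Optional[str]:
    """Return the variant with the lowest limit that still covers the need."""
    for model, limit in sorted(MEMORY_LIMIT_TB.items(), key=lambda kv: kv[1]):
        if required_tb <= limit:
            return model
    return None  # more memory than any listed variant supports


print(smallest_sufficient_model(1.0))
print(smallest_sufficient_model(3.0))
print(smallest_sufficient_model(6.0))
```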

Across the full range, a number of other suffixes pop up, too; N, V, and S are aimed at specific workloads (Networking, Virtualization, and Search, respectively), and T is designed for long-life/reduced-thermal loads. Finally, a few models have a Y suffix. This denotes that they have a feature called “speed select,” which allows applications to be pinned to the cores with the best thermal headroom and highest-possible clock speeds.

Cascade Lake itself is an incremental revision to the Skylake SP architecture. The basic parameters (up to 28 cores/56 threads per die, 1MB level 2 cache per core, up to 38.5MB shared level 3 cache, up to 48 PCIe 3.0 lanes, six DDR4 memory channels, and AVX-512 support) remain the same, but the details show improvement. The new chips support DDR4-2933, up from DDR4-2666, and the standard memory supported is now 1.5TB instead of 768GB. Their AVX-512 support now includes an extension called VNNI (“vector neural network instructions”) aimed at accelerating machine-learning workloads. They also include (largely unspecified) hardware fixes for most variants of the Spectre and Meltdown attacks.
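To give a feel for what VNNI accelerates: the article names no specific instruction, but one VNNI operation, `VPDPBUSD`, multiplies four unsigned 8-bit values by four signed 8-bit values, sums the products, and adds the result to a 32-bit accumulator in a single step. The pure-Python sketch below emulates one 32-bit lane of that behavior; the function names are invented for illustration, and real hardware handles many lanes in parallel.

```python
def dpbusd_lane(acc: int, a_bytes, b_bytes) -> int:
    """Emulate one 32-bit lane: acc + sum(u8 * s8 over four byte pairs)."""
    if len(a_bytes) != 4 or len(b_bytes) != 4:
        raise ValueError("each lane consumes exactly four byte pairs")
    total = acc
    for a, b in zip(a_bytes, b_bytes):
        if not 0 <= a <= 255:
            raise ValueError("first operand must be unsigned 8-bit")
        if not -128 <= b <= 127:
            raise ValueError("second operand must be signed 8-bit")
        total += a * b
    # The non-saturating form wraps around to a signed 32-bit result.
    total &= 0xFFFFFFFF
    return total - (1 << 32) if total & 0x80000000 else total


def dot_u8_s8(a, b) -> int:
    """Dot product of equal-length vectors (length a multiple of four)."""
    if len(a) != len(b) or len(a) % 4:
        raise ValueError("vectors must match and be a multiple of four long")
    acc = 0
    for i in range(0, len(a), 4):
        acc = dpbusd_lane(acc, a[i:i + 4], b[i:i + 4])
    return acc


activations = [1, 2, 3, 4, 10, 20, 30, 40]    # unsigned 8-bit
weights = [5, -6, 7, -8, 1, -1, 1, -1]        # signed 8-bit
print(dot_u8_s8(activations, weights))
```

Dot products over 8-bit values like this are the inner loop of quantized neural-network inference, which is why fusing the multiply, sum, and accumulate steps helps those workloads.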

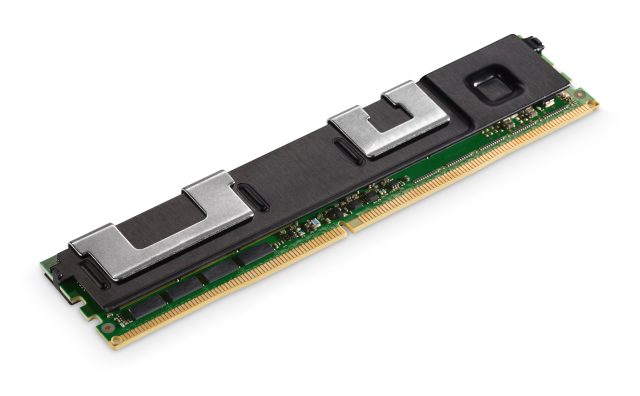

The other big thing that Cascade Lake brings beyond Skylake is support for Optane memory. Most of the Xeon SP range (though, oddly, not the Xeon AP processors) can use Optane DIMMs, which fit DDR4 slots and communicate over Intel's DDR-T protocol. Optane (also known as 3D XPoint) is a non-volatile solid-state memory technology developed by Intel and Micron. Its promise is to offer density that’s comparable to flash, random access performance that’s within an order of magnitude or two of DDR RAM, and enough write endurance that it can be used in memory-type workloads without failing prematurely. It does all this at a price per gigabyte considerably lower than DDR4.

Intel has been talking about using Optane DIMMs for memory-like tasks for some time, but only today is it finally launching, as Optane DC Persistent Memory. Systems can’t use Optane exclusively—they’ll need some conventional DDR4 as well—but by using the combination they can be readily equipped with vast quantities of memory, using 128, 256, or 512GB Optane DIMMs.

Applications unaware of non-volatile memory can use the Optane and DDR4 as a single giant pool of memory. Behind the scenes, the DDR4 will cache the Optane, and the overall effect will be simply that a machine has an awful lot of memory that’s a little slower than regular memory. Alternatively, applications can be written to explicitly use non-volatile memory and will have direct access to the Optane, using it as a kind of giant, randomly accessible, high-speed disk.
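The second mode, where an application addresses persistent memory directly, is commonly built on memory-mapped files. The sketch below shows the general pattern with Python's standard `mmap` module; it is an illustration rather than Intel's API. It runs against any ordinary file, and the notion that the path would live on a persistent-memory-aware filesystem is an assumption. Production code would more likely use a dedicated library such as PMDK.

```python
import mmap
import os
import tempfile


def persist_record(path: str, payload: bytes, size: int = 4096) -> None:
    """Write payload through a memory mapping and flush it to the backing file."""
    if len(payload) > size:
        raise ValueError("payload does not fit in the mapped region")
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.ftruncate(fd, size)                  # make the file `size` bytes long
        with mmap.mmap(fd, size) as region:
            region[:len(payload)] = payload     # plain memory store, no write() call
            region.flush()                      # push the change to the backing store
    finally:
        os.close(fd)


def read_record(path: str, length: int) -> bytes:
    """Read the first `length` bytes back, as a later process would."""
    with open(path, "rb") as handle:
        return handle.read(length)


# ASSUMPTION: a temporary file stands in for a file on persistent memory.
with tempfile.TemporaryDirectory() as workdir:
    demo_path = os.path.join(workdir, "record.bin")
    persist_record(demo_path, b"survives restart")
    print(read_record(demo_path, 16))
```

The point of the pattern is that the application updates data with ordinary loads and stores, and only the flush marks the moment the data is expected to be durable.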

To alleviate any concerns about endurance, Intel is offering a five-year warranty for Optane DC Persistent Memory, even for parts that have been running at their peak write performance for the entire five years.

Intel also announced some refreshes to its Xeon D systems-on-chips. The line began in 2015 with the Broadwell-based Xeon D 1500 parts. Last year, these were joined by the Skylake SP-based Xeon D 2100 line, which offered a significant upgrade in performance and memory capacity but with much higher power draws, too.

Today comes the Xeon D 1600 line, direct replacements for the 1500 parts. Surprisingly, these new 1600 parts continue to use the same Broadwell architecture as their predecessors; they’re aimed at the same kinds of storage and networking workloads, with two to eight cores (up to 16 threads), up to 128GB RAM, and power draws between 27 and 65W.

As well as the processor cores, they include (depending on which exact model you look at) four 10GbE controllers, Intel QuickAssist Technology acceleration of compression and encryption workloads, six SATA 3 channels, four each of USB 3.0 and 2.0 ports, 24 lanes of PCIe 3.0, and eight lanes of PCIe 2.0.

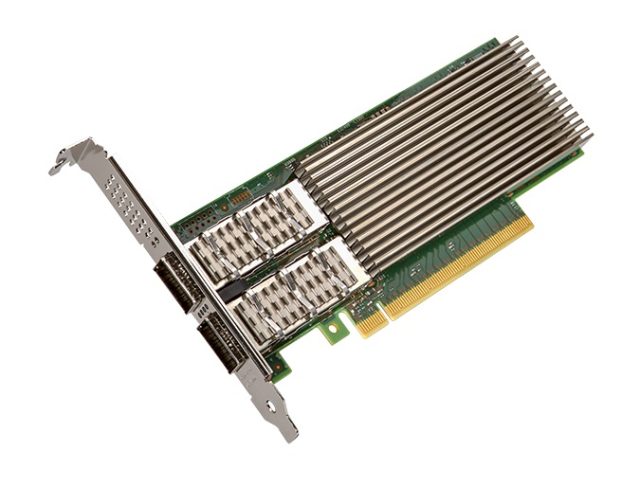

Announced today but coming in the third quarter is a new Intel Ethernet controller. The 800 series, codenamed Columbiaville, will support 100Gb Ethernet. These controllers are rather more programmable than your typical Ethernet controller, with customizable software-controlled packet parsing happening within the Ethernet controller itself. That means the chip can send a packet for further processing, reroute it to a different destination, or do whatever an application needs, all without involving the host processor. The controllers also support application-defined queues and rate limits, so complex application-specific prioritization can be enforced.
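Conceptually, application-defined queues and rate limits amount to a match-then-police pipeline: classify each packet into a queue, then check that queue's budget. The sketch below models that idea in software with a token bucket. It is not the 800 series programming interface; every name, rule, and field in it is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Simple rate limiter: refills `rate` bytes per second up to `capacity`."""
    rate: float
    capacity: float
    tokens: float = field(init=False)
    last: float = 0.0

    def __post_init__(self) -> None:
        self.tokens = self.capacity      # start with a full bucket

    def allow(self, size: int, now: float) -> bool:
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False


# Hypothetical classification rules: first match wins.
RULES = [
    (lambda p: p["dst_port"] == 443, "web"),
    (lambda p: p["proto"] == "udp", "bulk"),
]


def classify(packet: dict) -> str:
    for matches, queue in RULES:
        if matches(packet):
            return queue
    return "default"


def steer(packet: dict, limiters: dict, now: float) -> str:
    """Return the queue for a packet, or "drop" if its queue is over budget."""
    queue = classify(packet)
    bucket = limiters.get(queue)
    if bucket is not None and not bucket.allow(packet["length"], now):
        return "drop"
    return queue


limiters = {"bulk": TokenBucket(rate=1000, capacity=1500)}
udp = {"proto": "udp", "dst_port": 9000, "length": 1000}
print(steer(udp, limiters, now=0.0))   # within budget
print(steer(udp, limiters, now=0.0))   # bucket exhausted
print(steer(udp, limiters, now=1.0))   # refilled after one second
```

Doing this on the controller rather than in host software is what lets the decisions happen without spending CPU cycles on each packet.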

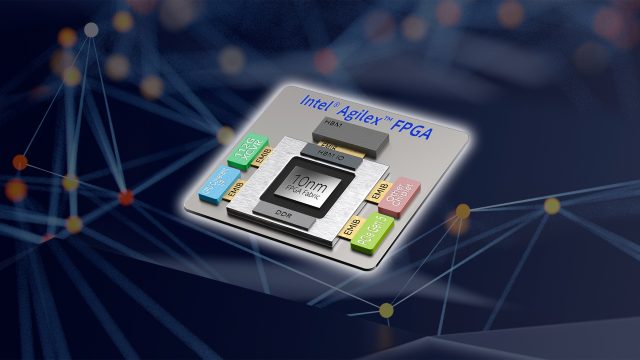

For its final data center offering, Intel announced the Agilex FPGA (field programmable gate array, a chip that can have its internal wiring reconfigured on the fly), built using the company’s 10nm process. These chips offer up to 40TFLOPS of number-crunching performance and enable developers to build a wide range of application-specific accelerators. The FPGAs will sport a range of optional capabilities: four ARM Cortex-A53 cores; support for PCIe generation 4 or 5, DDR4, DDR5, and Optane DC Persistent Memory; HBM (high bandwidth memory) mounted in the package; and cache-coherent interconnects to attach them to Xeon SP chips.

For machine-learning workloads, they’ll support a range of low-precision integer and floating point formats. Further customization will come from the ability to work with Intel and directly embed custom chiplets into the FPGAs.
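As a rough illustration of why low-precision integer formats suit machine learning, the sketch below maps floating-point values onto 8-bit integers with a single scale factor and then recovers approximations of the originals. This is a generic symmetric quantization scheme chosen for the example, not something the article attributes to Agilex, and the function names are invented.

```python
def quantize_int8(values):
    """Map floats onto integers in [-127, 127] using one shared scale factor."""
    peak = max((abs(v) for v in values), default=0.0)
    scale = peak / 127 if peak else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.82, -1.0, 0.031, 0.4]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
worst = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"largest rounding error: {worst:.5f} (half a step is {scale / 2:.5f})")
```

Each value now needs one byte instead of four or eight, at the cost of a rounding error bounded by half a quantization step.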

Over the last few years, FPGAs have become increasingly common, especially in the cloud data centers operated by the likes of Microsoft, Google, and Amazon. They offer a useful midpoint between the enormous flexibility of software-based computation and the enormous performance of hardware-based acceleration. That makes them well suited to accelerating things like networking, encryption, and machine-learning workloads in a manner that is readily upgraded and altered to adapt to new algorithmic requirements and models.

Intel plans to have these available from the third quarter.

via Ars Technica https://arstechnica.com

April 2, 2019 at 05:26PM