The idea of a balloon that floats high above Earth indefinitely is a tantalizing one. Solar power would allow such stratospheric balloons to operate like low-cost satellites at the edge of space, where they could provide communications in remote or disaster-hit areas, follow hurricanes, or monitor pollution at sea. One day, they could even take tourists on near-space trips to see the curvature of the planet.

It’s not a new idea. Indeed, stratospheric balloons were flying even before NASA was founded in 1958, and the agency still uses them for science missions. And Project Loon, owned by Google’s parent company Alphabet, successfully deployed such balloons to provide mobile communications in the aftermath of Hurricane Maria in Puerto Rico.

There’s a major snag, though: current balloons drift with the wind and can only stay over one area for a few days at a time. In the stratosphere, at around 60,000 feet (18,300 meters), winds blow in different directions at different altitudes. In theory, then, a balloon should be able to find a wind blowing in any desired direction simply by changing altitude. But while machine learning and better data are improving navigation, progress has been gradual.
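The altitude-hopping idea can be illustrated with a toy calculation. This is a minimal sketch, not Loon's or DARPA's navigation method: it assumes the balloon already knows the wind at each candidate altitude (the kind of information a wind sensor is meant to supply), and the function name `best_altitude` and the sample wind profile are hypothetical.

```python
import math


def best_altitude(wind_profile, desired_heading_deg):
    """Return the altitude whose wind pushes hardest toward a desired heading.

    Hypothetical helper for illustration only.
    wind_profile: list of (altitude_m, east_mps, north_mps) tuples, assumed known.
    desired_heading_deg: compass heading to travel toward (0 = north, 90 = east).
    """
    theta = math.radians(desired_heading_deg)
    # Unit vector of the desired heading in (east, north) coordinates.
    dir_east, dir_north = math.sin(theta), math.cos(theta)

    # Project each layer's wind onto that heading; the largest projection is
    # the layer that carries the balloon fastest in the direction we want.
    def push(layer):
        _, east, north = layer
        return east * dir_east + north * dir_north

    return max(wind_profile, key=push)[0]


# Hypothetical wind profile: (altitude in meters, eastward m/s, northward m/s).
profile = [(18_000, 5.0, 0.0), (19_000, -3.0, 1.0), (20_000, 0.0, -4.0)]
print(best_altitude(profile, 270))  # drift west  -> 19000
print(best_altitude(profile, 180))  # drift south -> 20000
```

Holding station would mean switching layers repeatedly so that drifts in opposite directions roughly cancel; the sketch shows only the single choice of which layer to ride next.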

DARPA, the US military’s research arm, thinks it may have cracked the problem. It is currently testing a wind sensor that could allow devices in its Adaptable Lighter-Than-Air (ALTA) balloon program to spot wind speed and direction from a great distance and then make the necessary adjustments to stay in one spot. DARPA has been working on ALTA for some time, but its existence was only revealed in September.

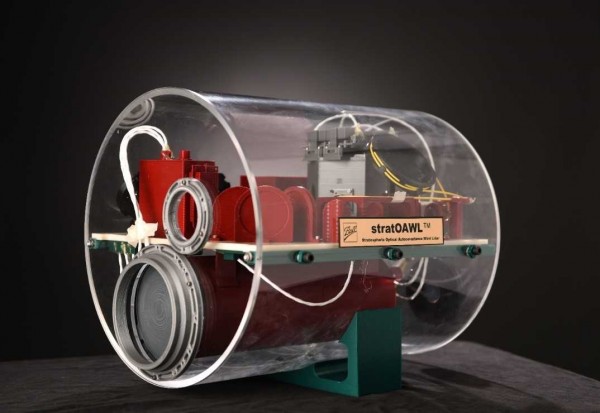

Ball Aerospace

“By flying higher we hope to take advantage of a larger range of winds,” says ALTA project manager Alex Walan. ALTA will operate even higher than Loon, at 75,000 to 90,000 feet (22,900 to 27,400 meters, or 14 to 17 miles), where the winds are less predictable. That shouldn’t be a problem if the balloon can see exactly where the favorable winds are.

The wind sensor, called Strat-OAWL (short for “stratospheric optical autocovariance wind lidar”), is a new version of an instrument originally designed for NASA satellites. Made by Ball Aerospace, OAWL fires pulses of laser light into the air. A small fraction of that light is scattered back by particles and molecules in the air and gathered by a telescope. The wavelength of the returning light shifts slightly depending on how fast the air it bounced off is moving along the beam, an effect known as the Doppler shift. By analyzing this shift, OAWL can determine the speed and direction of the wind.
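The underlying Doppler relation is simple enough to sketch. Because the laser light travels out to the moving air and back, the frequency shift is twice what a one-way trip would give: Δf = −2v/λ, where v is the air's speed along the beam (positive when moving away) and λ is the laser wavelength. This sketch uses only that textbook relation; OAWL's name refers to an optical autocovariance technique for measuring the shift, which is not modeled here, and the 532-nanometer wavelength is an illustrative assumption rather than a Strat-OAWL specification.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def doppler_shift_hz(wavelength_m, speed_mps):
    """Frequency shift of light backscattered from air moving along the beam.

    speed_mps is positive when the air moves away from the lidar.
    The light makes a round trip, so the shift is doubled: -2 * v / wavelength.
    Valid for speeds far below the speed of light.
    """
    return -2.0 * speed_mps / wavelength_m


def speed_from_shift(wavelength_m, shift_hz):
    """Invert doppler_shift_hz: line-of-sight air speed from a measured shift."""
    return -wavelength_m * shift_hz / 2.0


wavelength = 532e-9  # illustrative green laser wavelength (assumption)
shift = doppler_shift_hz(wavelength, 10.0)  # air receding at 10 m/s
laser_freq = SPEED_OF_LIGHT / wavelength
print(f"shift: {shift / 1e6:.1f} MHz")                # -37.6 MHz
print(f"fractional shift: {shift / laser_freq:.1e}")  # -6.7e-08
print(f"recovered speed: {speed_from_shift(wavelength, shift):.2f} m/s")  # 10.00 m/s
```

A 10-meter-per-second wind changes the light's frequency by less than one part in ten million, which gives a sense of how precise the optics must be.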

Unlike other wind sensors, OAWL looks in two directions at once, giving a better indication of wind speed and direction.

“It’s like looking out with two eyes open instead of one,” says Sara Tucker, a lidar systems engineer at Ball Aerospace.
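One way to see why the second look helps: a single beam senses only the wind component along its own line of sight, so two beams pointed in different directions give two equations for the two horizontal wind components. This is a minimal sketch, assuming both beams share one elevation angle and vertical wind is negligible; the function name `horizontal_wind` and the beam angles are hypothetical, not Strat-OAWL's actual geometry.

```python
import math


def horizontal_wind(los1, az1_deg, los2, az2_deg, elevation_deg=0.0):
    """Recover (east, north) wind in m/s from two line-of-sight speeds.

    Hypothetical helper. Each los value is the wind component along its beam,
    positive away from the lidar; azimuths are compass degrees (0 = north).
    Assumes a shared elevation angle and negligible vertical wind.
    """
    ce = math.cos(math.radians(elevation_deg))
    a1, a2 = math.radians(az1_deg), math.radians(az2_deg)
    # los_i = ce * (east * sin(a_i) + north * cos(a_i)): a 2x2 linear system.
    m11, m12 = ce * math.sin(a1), ce * math.cos(a1)
    m21, m22 = ce * math.sin(a2), ce * math.cos(a2)
    det = m11 * m22 - m12 * m21
    if abs(det) < 1e-9:
        raise ValueError("beams must not be parallel, opposite, or vertical")
    # Solve with Cramer's rule.
    east = (los1 * m22 - m12 * los2) / det
    north = (m11 * los2 - m21 * los1) / det
    return east, north


# Hypothetical wind of 3 m/s east and 4 m/s north, seen by beams at 45 and 135 degrees.
true_east, true_north = 3.0, 4.0
los = [true_east * math.sin(math.radians(az)) + true_north * math.cos(math.radians(az))
       for az in (45.0, 135.0)]
east, north = horizontal_wind(los[0], 45.0, los[1], 135.0)
speed = math.hypot(east, north)
heading = math.degrees(math.atan2(east, north)) % 360  # direction the wind blows toward
print(f"{speed:.1f} m/s toward {heading:.1f} degrees")  # 5.0 m/s toward 36.9 degrees
```

With only one beam, the same measurement would be consistent with infinitely many wind vectors; the second line of sight pins it down.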


Previous versions of OAWL flown in aircraft have measured winds more than 14 kilometers (8.7 miles) away with an accuracy of better than a meter per second. The main challenge with Strat-OAWL has been shrinking it to fit the space, weight, and power limits of the ALTA balloons.

Walan was not able to discuss military roles for ALTA technology, but a high-resolution sensor permanently positioned 15 miles above a war zone would be a useful asset. Military aircraft have ceilings of 60,000 to 65,000 feet, so they could intercept Loon-type balloons. Because it will fly higher, ALTA will be a much trickier target. The balloon could provide secure communications and navigation or act as a mother ship for drones.

The ALTA test flight program has already begun, with flights lasting up to three days, and will continue with steadily longer flights.

The technology might have applications beyond the military, too. Some companies, such as World View, are talking about “near-space tourism,” taking a passenger capsule to altitudes where the blackness of space and the curvature of the Earth can be seen. Reliable navigation of the sort provided by OAWL would make such trips a far safer prospect. It could also give commercial airliners a tool to spot and avoid clear-air turbulence.

The ALTA balloon itself is made by Raven Aerostar, which also makes the Loon balloons. The firm’s general manager, Scott Wickersham, says this sort of technology gets us much closer to balloons that stay aloft indefinitely, and that will make all sorts of applications possible.

“I believe we will see a future in which the stratospheric balloons will be as common as commercial airliners are today,” he says.

via Technology Review Feed – Tech Review Top Stories https://ift.tt/1XdUwhl

November 14, 2018 at 12:22PM
