If you’ve purchased a laptop or tablet with an AMD Ryzen chip inside, there’s a performance tweak you absolutely need to know about. Apply it to AMD’s AI/gaming powerhouse Ryzen AI Max chip, and you can score enormous performance gains in just seconds!

Savvy gamers know instinctively that you can boost your game’s frame rate by lowering the resolution or the visual quality, or by making an adjustment to the Windows power-performance slider. But the Ryzen AI Max is a new kind of device: a killer mobile processor that can run modern games at elevated frame rates, and serve as an AI powerhouse.

What’s the secret? A simple adjustment of the Ryzen AI Max’s unified frame buffer, or available graphics memory. While it’s a simple fix, in my tests, it made an enormous difference: up to a 60 percent performance boost in some cases.

When I was comparing Intel’s “Arrow Lake” mobile processor to AMD’s Ryzen AI 300 processor, I was unable to run certain AI tests because of the amount of system memory and VRAM they required. After I published the story, AMD reached out to suggest dialing up the VRAM via the laptop BIOS to enable the test to run. (As it turned out, this didn’t make a difference in that specific test.)

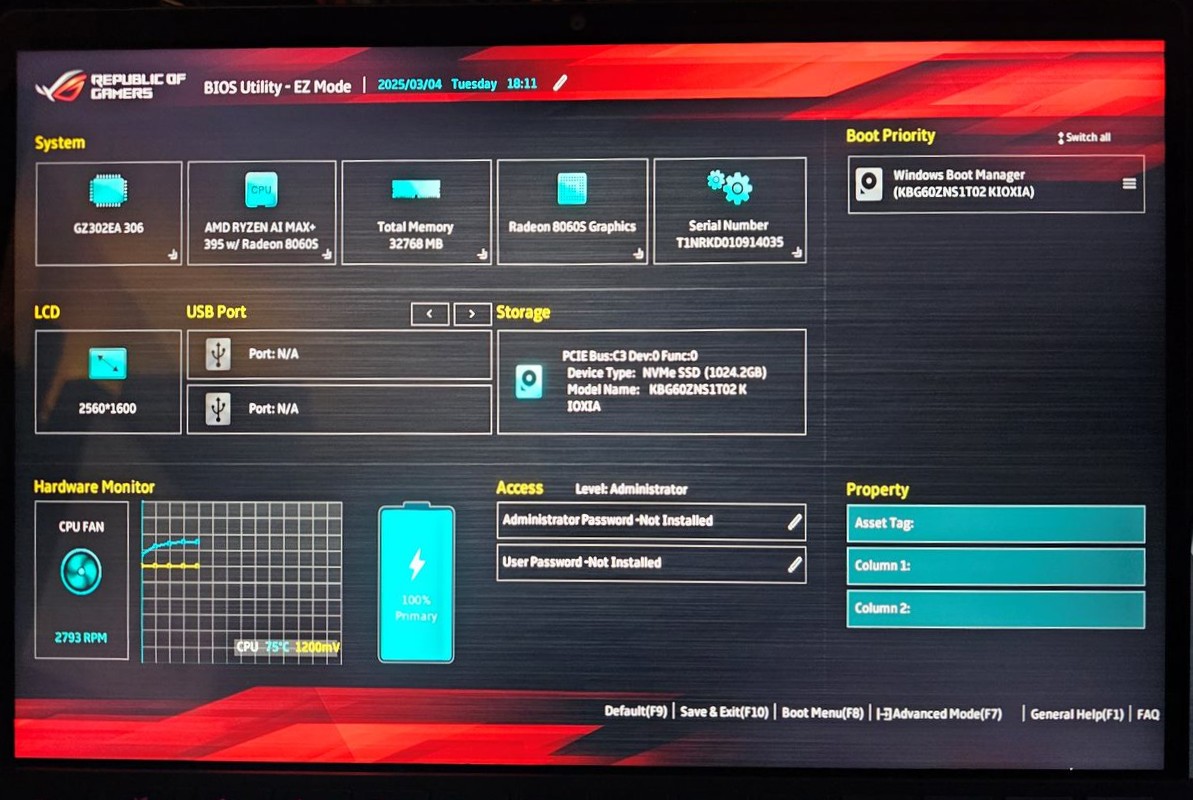

When Asus ROG provided me an Asus ROG Flow Z13 gaming tablet for testing AMD’s Ryzen AI Max+ chip, the topic surfaced again. For whatever reason, Asus had configured its tablet with just 4GB of available video memory. The company recommended we test with 8GB instead. It seemed to be an opportune time to see what effects adjusting the Ryzen AI Max’s graphics memory would have.

Why does video memory matter?

VRAM stands for video RAM. If you use a discrete GPU, the amount of VRAM is predetermined by your graphics-card manufacturer, and the VRAM chips are soldered right onto the board. Some people use “VRAM” as shorthand when talking about integrated graphics, as well. That’s not entirely accurate; integrated graphics share memory between the PC’s main memory and the video logic, and the “VRAM” in this case is known as the “UMA frame buffer.”

VRAM (and the UMA frame buffer) stores the textures used by a PC game where the GPU can reach them directly, allowing them to be quickly accessed and rendered on the screen. If the textures exceed the amount of VRAM, your PC must pull them in from somewhere else (RAM or the SSD), slowing down gameplay.

In the case of AI, the VRAM acts a lot like standard PC RAM, storing the weights of an AI model and allowing it to run. In many cases, insufficient VRAM means that a particular LLM might not run. For both games and AI, the amount of memory available to the GPU matters.
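To make the AI case concrete, here’s a minimal back-of-the-envelope sketch in Python. It is not how any benchmark in this story decides what will run; it simply multiplies a parameter count by the bits stored per parameter. The 7-billion-parameter model, the 4-bit and 16-bit precisions, and the flat 1GB `overhead_gb` allowance are all illustrative assumptions.

```python
def weights_size_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for the model weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def fits_in_buffer(num_params: float, bits_per_param: int,
                   buffer_gb: float, overhead_gb: float = 1.0) -> bool:
    """Rough check: weights plus an assumed fixed overhead must fit in the buffer.

    overhead_gb is a hypothetical allowance for everything that is not weights;
    real runtimes vary widely.
    """
    return weights_size_gb(num_params, bits_per_param) + overhead_gb <= buffer_gb


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model at two assumed precisions.
    for buffer_gb in (4, 8, 16):
        for bits in (4, 16):
            verdict = "fits" if fits_in_buffer(7e9, bits, buffer_gb) else "too big"
            print(f"7B model at {bits}-bit in a {buffer_gb}GB buffer: {verdict}")
```

Treat the result as a rough guide only. Some software can spill into shared system memory, so a model that looks “too big” on paper may still run, just more slowly.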

If you own a discrete graphics card from any manufacturer, the amount of VRAM can’t be adjusted. On Intel Core systems, the available UMA frame buffer is also typically fixed at half of the available system memory. In other words, if you have an Intel Arrow Lake laptop with 16GB of total memory, up to 8GB is accessible by the integrated GPU. And yes, adding more system memory increases the size of the UMA frame buffer. However, you still can’t adjust the UMA frame buffer’s size to your own preferences.

Mark Hachman / Foundry

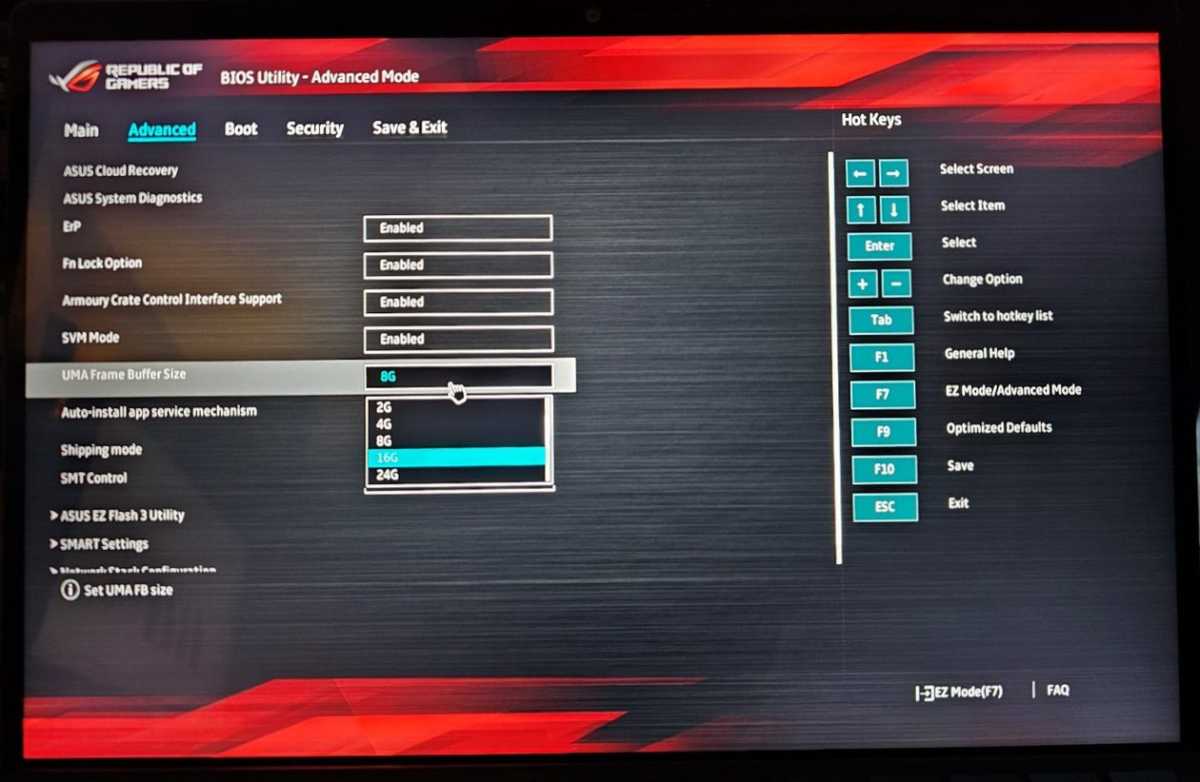

AMD, on the other hand, typically allows you to adjust the size of the UMA frame buffer on its Ryzen processors (and not just the AI Max!), usually through the firmware. In my case, I got into the UEFI/BIOS just by rebooting the tablet and tapping the F2 key as it restarted.

On the ROG tablet, I found the adjustment in the advanced settings. Several options were available, from an “Auto” setting to 24GB, with several increments in between. As I noted above, Asus had accidentally sent out its tablets with the 4GB setting enabled. All I did was select a new size for the frame buffer, save, and the boot cycle continued.


Warning: If you own a handheld PC like a Steam Deck, adjusting the UMA frame buffer to improve game performance isn’t that unusual. Making adjustments to the BIOS/UEFI does carry some risk, however. In this case, allocating too much memory to the GPU might not allow an application to run at all, or cause instability. You’re probably safe leaving 8GB reserved for main memory.
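If you’d like a quick sanity check before you reboot, here’s a minimal Python sketch of that rule of thumb: pick the largest frame buffer that still leaves 8GB for main memory. The function name, the 32GB and 16GB totals, and the list of menu options are hypothetical; your firmware may offer different increments.

```python
def largest_safe_buffer(total_gb, options_gb, reserve_gb=8):
    """Return the largest frame-buffer option that still leaves at least
    reserve_gb of system memory for Windows and applications, or None."""
    candidates = [opt for opt in options_gb if total_gb - opt >= reserve_gb]
    return max(candidates) if candidates else None


if __name__ == "__main__":
    menu = [4, 8, 16, 24]  # assumed firmware options, in GB
    for total in (16, 32):  # assumed total system memory, in GB
        print(f"{total}GB system: largest safe buffer = "
              f"{largest_safe_buffer(total, menu)}GB")
```

The largest safe setting isn’t necessarily the fastest one, so it’s worth benchmarking a couple of sizes rather than simply maxing out the buffer.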

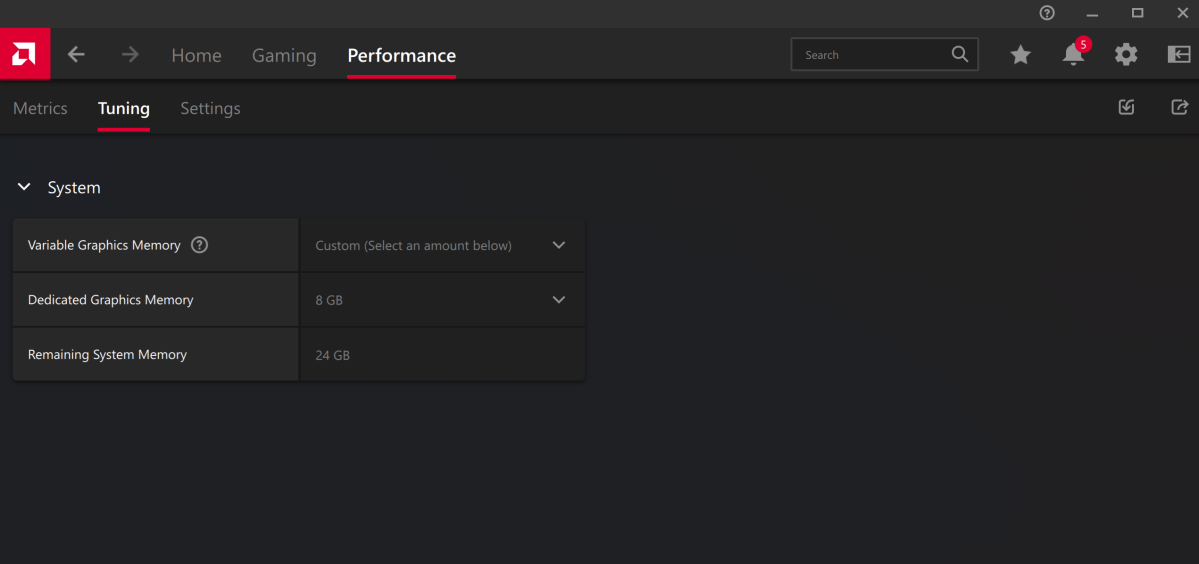

If you don’t want to fiddle around in your laptop’s BIOS, you might find that your laptop or tablet ships with the AMD Adrenalin utility. In this case, you might be able to adjust the graphics memory from within the application itself. I found it within the Performance > Tuning tab. If you’re confused about how much memory you’re allocating to graphics and how much is left for your PC, the utility helps make that clear.


Tested: Does adjusting the UMA frame buffer make a difference?

I’m currently reviewing the Asus ROG Flow Z13 and its AMD Ryzen AI Max+ chip, but I paused to see what improvements, if any, could be made by adjusting the size of the UMA frame buffer. In general, I found that adjusting it made the biggest difference in games and especially AI.

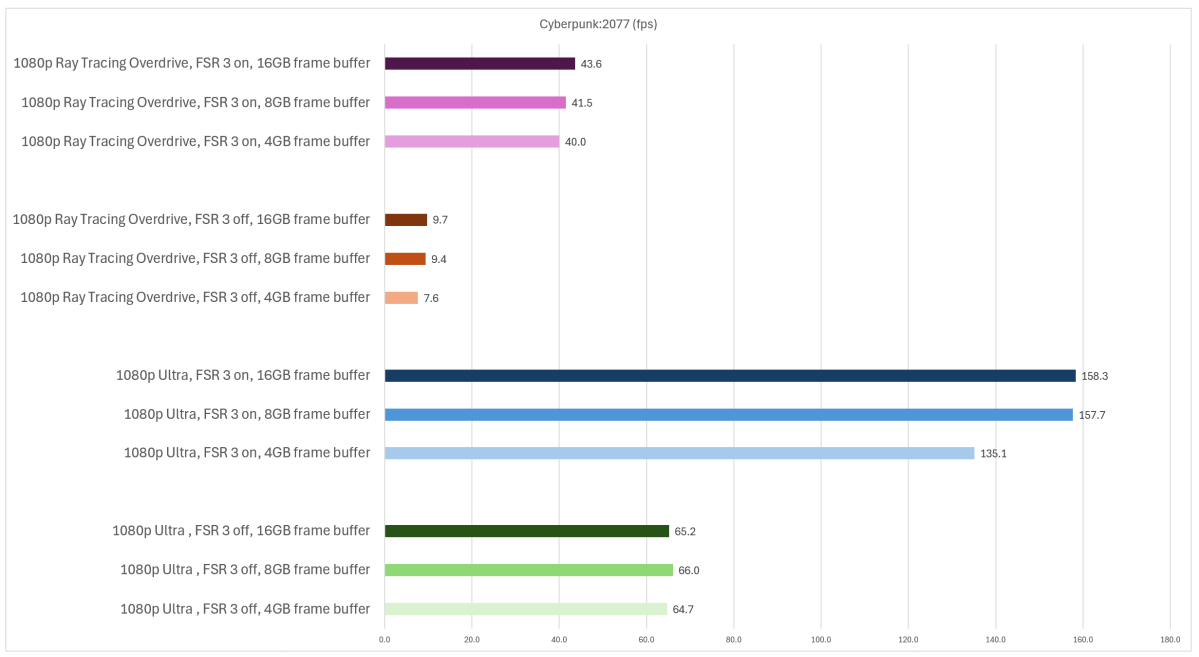

I didn’t see enormous gains in synthetic graphics benchmarks: In 3DMark’s Steel Nomad test, tweaking the UMA frame buffer boosted scores from 1,643 to 1,825, or about 11 percent. Ditto for PCMark 10, which measures productivity: The needle barely moved. I turned to Cyberpunk 2077 to test how adjusting the UMA frame buffer applied to games.
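If you want to check the math on your own runs, the percentage is just the change divided by the starting score. Here’s a minimal Python sketch using the two Steel Nomad scores quoted above; the function name is my own.

```python
def percent_gain(before: float, after: float) -> float:
    """Percentage improvement of `after` relative to `before`."""
    return (after - before) / before * 100.0


if __name__ == "__main__":
    # Steel Nomad scores before and after the frame buffer tweak.
    print(f"{percent_gain(1643, 1825):.1f}%")  # prints 11.1%
```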

I ran four benchmarks, repeating each one with a UMA frame buffer of 4GB, 8GB, and 16GB. The idea was to see what the chip could do on its own, just rasterizing the game, and then with all of the GPU’s AI enhancements turned on to maximize frame rate:

- 1080p resolution, Ultra settings, with all enhancements turned off

- 1080p resolution, Ultra settings, with all enhancements (AMD FSR 3 scaling and frame generation) turned on

- 1080p resolution, Ray Tracing Overdrive settings, with all enhancements turned off

- 1080p resolution, Ray Tracing Overdrive settings, with all enhancements turned on
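For anyone who wants to repeat the experiment, the four scenarios above crossed with three frame buffer sizes work out to 12 runs. Here’s a minimal Python sketch that enumerates them. The dictionary keys and names are illustrative only; they don’t correspond to any real benchmark configuration format.

```python
from itertools import product

PRESETS = ("Ultra", "Ray Tracing Overdrive")
ENHANCEMENTS = (False, True)  # FSR 3 scaling and frame generation off/on
BUFFERS_GB = (4, 8, 16)       # UMA frame buffer sizes tested


def build_test_matrix():
    """Return every preset / enhancement / buffer-size combination at 1080p."""
    return [
        {"resolution": "1080p", "preset": preset,
         "enhancements": enhanced, "buffer_gb": buffer_gb}
        for preset, enhanced, buffer_gb in product(PRESETS, ENHANCEMENTS, BUFFERS_GB)
    ]


if __name__ == "__main__":
    for run in build_test_matrix():
        print(run)
```

Keep in mind that each change of frame buffer size requires a reboot, so it’s quicker to group the runs by buffer size than by game setting.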


You can immediately see that the largest improvements in overall frame rate simply come from turning on AMD’s FidelityFX Super Resolution 3 (FSR 3). But adjusting the frame buffer gives you about a 10 percent boost in the Ray Tracing Overdrive mode. More importantly, it bumped up the frame rate on the 1080p Ultra settings, with FSR 3 on, by 17 percent. That’s all from an easy adjustment that costs you nothing.

It’s worth pointing out that the gains from adjusting the UMA frame buffer don’t always scale with its size. They seem to under the Ray Tracing Overdrive setting, but the same gains don’t play out elsewhere. I’ve found Cyberpunk’s tests to be quite reproducible, so I’m inclined to believe that it’s not just statistical variance.

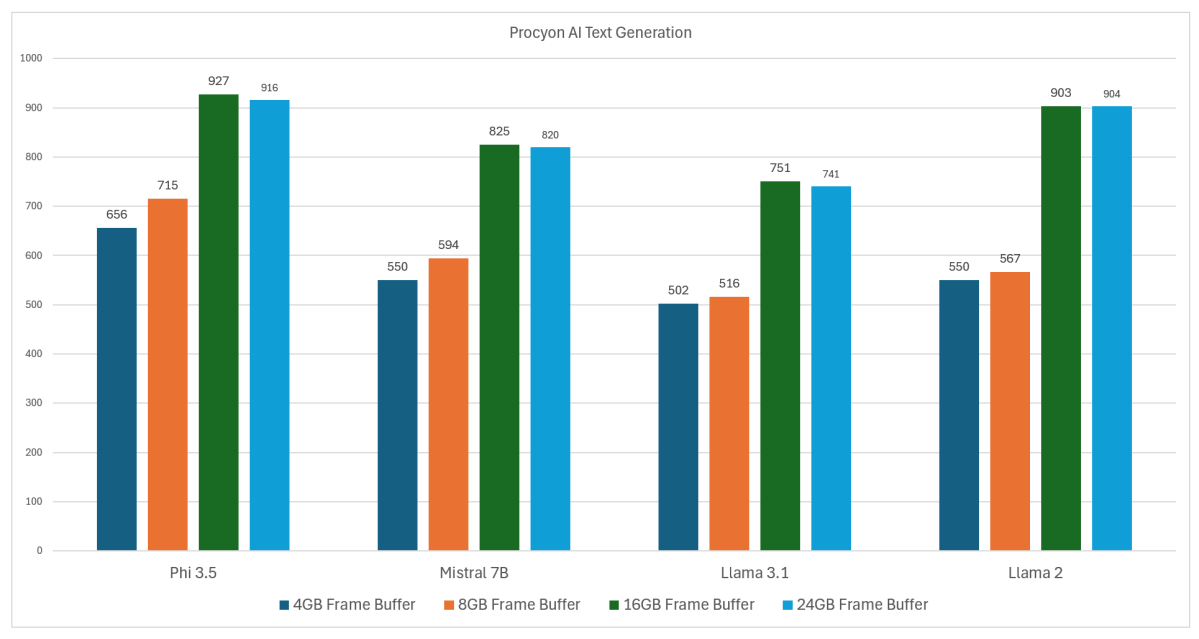

I saw the same thing when testing AI, too. I used UL Procyon’s AI Text Generation benchmark, which loads in four separate LLMs or AI chatbots, and asks a series of questions. UL generates its own scores, based on speed and token output. Here, there was an amazing jump in overall performance in places: 64 percent in Llama 2 just by dialing up the frame buffer! But it was also interesting that the performance increase was “capped” at 16GB. Selecting a 24GB frame buffer actually lowered the performance slightly.


(It might be worth noting that Procyon didn’t think it could run on the Ryzen AI Max, because the system didn’t report enough VRAM. It ran just fine, of course.)

I also checked my work using MLCommons’ own client benchmark, which uses a 7 billion-parameter LLM to generate “scores” of tokens per second and an initial “time to first token.” I didn’t see as much improvement — just about 10 percent from the 4GB to 8GB buffer, and then virtually nothing when I tested it with a 16GB buffer instead.

Integrated graphics matters again

Until now, adjusting the UMA frame buffer was largely irrelevant: Integrated GPUs were simply not powerful enough for that tweak to matter. Things are different now.

Again, if you’re a handheld PC user, you may have seen others adjusting their UMA frame buffer to eke out a bit more performance. But as my colleague Adam Patrick Murray commented, the Ryzen AI Max+ processor inside the Asus ROG Flow Z13 tablet is an odd hybrid of a handheld and a tablet. It’s more than that, of course, given that companies like Framework are putting it in small form-factor PCs.

But lessons learned from the handheld PC space do apply — and well! — to this new class of AI powerhouse chips. Adjusting the UMA frame buffer on an AMD Ryzen AI Max+ chip can be a terrific free tweak that can boost your performance by dramatic amounts.

via PCWorld https://www.pcworld.com

March 7, 2025 at 07:07AM