What if you could listen to music or a podcast without headphones or earbuds and without disturbing anyone around you? Or have a private conversation in public without other people hearing you?

Our newly published research introduces a way to create audible enclaves – localized pockets of sound that are isolated from their surroundings. In other words, we’ve developed a technology that could create sound exactly where it needs to be.

The ability to send sound that becomes audible only at a specific location could transform entertainment, communication and spatial audio experiences.

What is sound?

Sound is a vibration that travels through air as a wave. These waves are created when an object moves back and forth, compressing and decompressing air molecules.

The frequency of these vibrations is what determines pitch. Low frequencies correspond to deep sounds, like a bass drum; high frequencies correspond to sharp sounds, like a whistle.

Daniel A. Russell, CC BY-NC-ND

Controlling where sound goes is difficult because of a phenomenon called diffraction – the tendency of sound waves to spread out as they travel. This effect is particularly strong for low-frequency sounds because of their longer wavelengths, making it nearly impossible to keep sound confined to a specific area.
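As a rough illustration of why low frequencies are harder to confine, wavelength equals the speed of sound divided by frequency. The short sketch below assumes a speed of sound of about 343 m/s (air at roughly 20 °C). The three example frequencies are illustrative picks, not values from the research.

```python
# Wavelength = speed of sound / frequency.
# Assumption: sound travels at about 343 m/s in air at roughly 20 degrees C.
SPEED_OF_SOUND_M_S = 343.0


def wavelength_m(frequency_hz, speed_m_s=SPEED_OF_SOUND_M_S):
    """Return the wavelength in meters of a sound wave at the given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_m_s / frequency_hz


# Illustrative frequencies (assumed for this example only).
examples = [("bass note", 100.0), ("whistle", 3000.0), ("ultrasound", 40000.0)]

for label, freq in examples:
    print(f"{label:>10}: {freq:8.0f} Hz -> {wavelength_m(freq) * 100:8.2f} cm")
```

Under these assumptions, a 100 Hz bass note has a wavelength of several meters, while a 40 kHz ultrasonic wave has a wavelength of less than a centimeter. That difference in scale is why the ultrasonic wave is far easier to form into a narrow beam.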

Certain audio technologies, such as parametric array loudspeakers, can create focused sound beams aimed in a specific direction. However, the sound they emit is still audible along the beam's entire path as it travels through space.

The science of audible enclaves

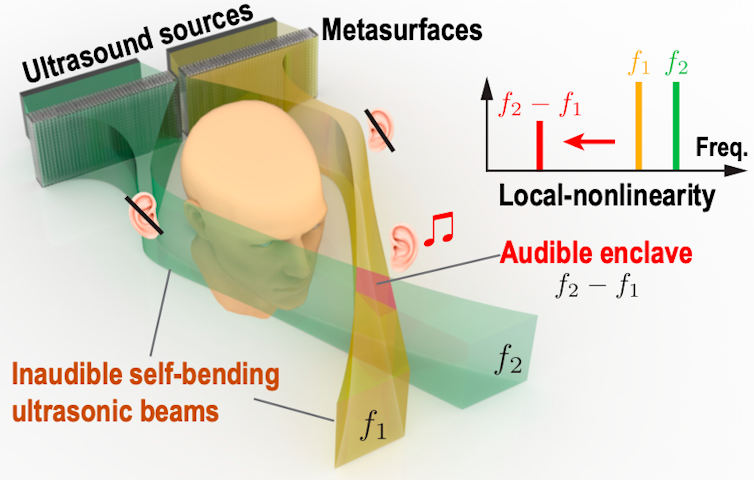

We found a new way to send sound to one specific listener: through self-bending ultrasound beams and a concept called nonlinear acoustics.

Ultrasound refers to sound waves with frequencies above the human hearing range, or above 20 kHz. These waves travel through the air like normal sound waves but are inaudible to people. Because ultrasound can penetrate through many materials and interact with objects in unique ways, it’s widely used for medical imaging and many industrial applications.

In our work, we used ultrasound as a carrier for audible sound. It can transport sound through space silently – becoming audible only when desired. How did we do this?

Normally, sound waves combine linearly, meaning they simply add together and the result contains only the frequencies that were already present. However, when sound waves are intense enough, they can interact nonlinearly, generating new frequencies that were not present before.

This is the key to our technique: We use two ultrasound beams at different frequencies that are completely silent on their own. But when they intersect in space, nonlinear effects cause them to generate a new sound wave at an audible frequency that would be heard only in that specific region.

Jiaxin Zhong et al./PNAS, CC BY-NC-ND

Crucially, we designed ultrasonic beams that can bend on their own. Normally, sound waves travel in straight lines unless something blocks or reflects them. However, by using acoustic metasurfaces – specialized materials that manipulate sound waves – we can shape ultrasound beams to bend as they travel. Similar to how an optical lens bends light, acoustic metasurfaces reshape the path that sound waves follow. By precisely controlling the phase of the ultrasound waves, we create curved sound paths that can navigate around obstacles and meet at a specific target location.

The key phenomenon at play is what’s called difference frequency generation. When two ultrasonic beams of slightly different frequencies, such as 40 kHz and 39.5 kHz, overlap, they create a new sound wave at the difference between their frequencies – in this case 0.5 kHz, or 500 Hz, which is well within the human hearing range. Sound can be heard only where the beams cross. Outside of that intersection, the ultrasound waves remain silent.
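The arithmetic above can be checked with a toy numerical sketch. It is not the model used in the research: squaring the combined signal is an assumed, simplified stand-in for a nonlinear interaction, and the sample rate, duration and helper name `tone_amplitude` are choices made for this illustration. The sketch measures how much 500 Hz content is present before and after the two tones are mixed nonlinearly.

```python
import math

# The two ultrasonic tones from the text.
F1_HZ, F2_HZ = 40_000.0, 39_500.0

# Assumptions for this sketch only: a sample rate well above twice the highest
# frequency present, and a duration holding a whole number of cycles of each tone.
SAMPLE_RATE_HZ = 400_000
DURATION_S = 0.02


def tone_amplitude(samples, freq_hz, sample_rate_hz=SAMPLE_RATE_HZ):
    """Estimate the amplitude of a single frequency component (one-bin DFT)."""
    n = len(samples)
    step = 2 * math.pi * freq_hz / sample_rate_hz
    re = sum(s * math.cos(step * i) for i, s in enumerate(samples))
    im = sum(s * math.sin(step * i) for i, s in enumerate(samples))
    return 2.0 * math.hypot(re, im) / n


n_samples = round(SAMPLE_RATE_HZ * DURATION_S)
times = [i / SAMPLE_RATE_HZ for i in range(n_samples)]

# Linear superposition: the two tones simply add.
linear = [math.cos(2 * math.pi * F1_HZ * t) + math.cos(2 * math.pi * F2_HZ * t)
          for t in times]

# Toy quadratic nonlinearity (assumed): square the combined signal.
mixed = [x * x for x in linear]

difference_hz = F1_HZ - F2_HZ
print(f"difference frequency: {difference_hz:.0f} Hz")
print(f"500 Hz content, linear sum:   {tone_amplitude(linear, difference_hz):.6f}")
print(f"500 Hz content, after mixing: {tone_amplitude(mixed, difference_hz):.6f}")
```

In this toy model the linear sum contains essentially no energy at 500 Hz, while the squared signal contains a clear 500 Hz component. This follows from the identity cos(a)·cos(b) = ½[cos(a − b) + cos(a + b)], which produces both a difference tone and a sum tone. The sum tone, at 79.5 kHz, stays in the ultrasonic range.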

This means you can deliver audio to a specific location or person without disturbing other people as the sound travels.

Advancing sound control

The ability to create audible enclaves has many potential applications.

Audible enclaves could enable personalized audio in public spaces. For example, museums could provide different audio guides to visitors without headphones, and libraries could allow students to study with audio lessons without disturbing others.

In a car, passengers could listen to music without keeping the driver from hearing navigation instructions. Offices and military settings could benefit from localized speech zones for confidential conversations. Audible enclaves could also be adapted to cancel out noise in designated areas, creating quiet zones to improve focus in workplaces or reduce noise pollution in cities.

This isn’t something that’s going to be on the shelf in the immediate future. Challenges remain for our technology. Nonlinear distortion can affect sound quality. Power efficiency is another issue: converting ultrasound to audible sound requires high-intensity fields that can be energy intensive to generate.

Despite these hurdles, audible enclaves present a fundamental shift in sound control. By redefining how sound interacts with space, we open up new possibilities for immersive, efficient and personalized audio experiences.

Jiaxin Zhong, Postdoctoral Researcher in Acoustics, Penn State and Yun Jing, Professor of Acoustics, Penn State. This article is republished from The Conversation under a Creative Commons license. Read the original article.

via Gizmodo https://gizmodo.com/

March 20, 2025 at 09:05AM