Facebook used AI for an eye-opening trick

https://ift.tt/2JUb7WR

Facebook used AI for an eye-opening trick

Tech

via Technology Review Feed – Tech Review Top Stories https://ift.tt/1XdUwhl

June 18, 2018 at 11:57AM

For everything from family to computers…

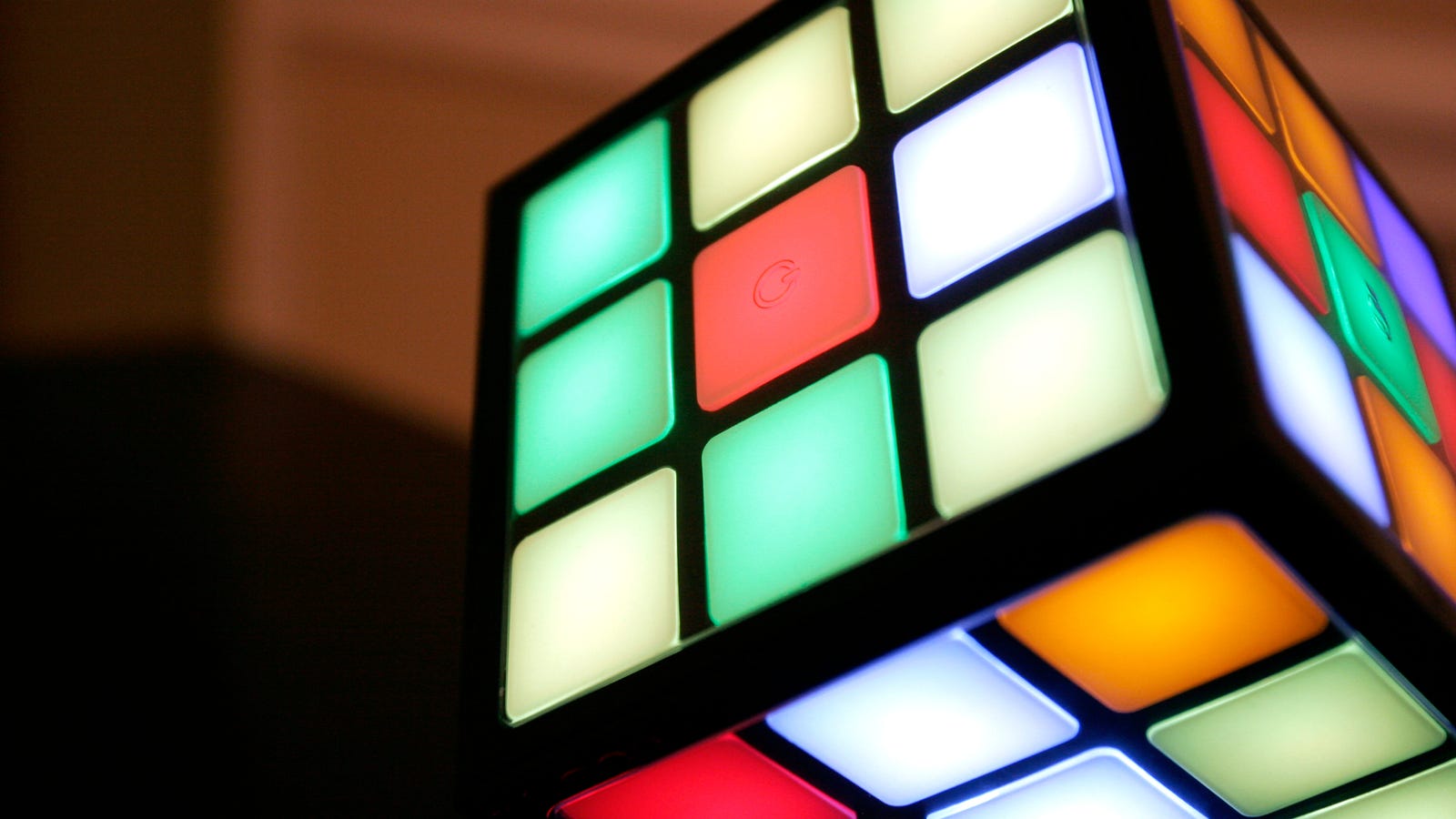

Self-Taught AI Masters Rubik’s Cube in Just 44 Hours

https://ift.tt/2JLHXND

Meet DeepCube, an artificially intelligent system that’s as good at playing the Rubik’s Cube as the best human master solvers. Incredibly, the system learned to dominate the classic 3D puzzle in just 44 hours and without any human intervention.

“A generally intelligent agent must be able to teach itself how to solve problems in complex domains with minimal human supervision,” write the authors of the new paper, published online at the arXiv preprint server. Indeed, if we’re ever going to achieve a general, human-like machine intelligence, we’ll have to develop systems that can learn and then apply those learnings to real-world applications.

And we’re getting there. Recent breakthroughs in machine learning have produced systems that, without any prior knowledge, have learned to master games like chess and Go. But these approaches haven’t translated very well to the Rubik’s Cube. The problem is that reinforcement learning—the strategy used to teach machines to play chess and Go—doesn’t lend itself well to complex 3D puzzles. Unlike chess and Go—games in which it’s relatively easy for a system to determine if a move was “good” or “bad”—it’s not immediately clear to an AI that’s trying to solve the Rubik’s Cube if a particular move has improved the overall state of the jumbled puzzle. When an artificially intelligent system can’t tell if a move is a positive step towards the accomplishment of an overall goal, it can’t be rewarded, and if it can’t be rewarded, reinforcement learning doesn’t work.

On the surface, the Rubik’s Cube may seem simple, but it offers a staggering number of possibilities. A 3x3x3 cube features a total “state space” of 43,252,003,274,489,856,000 combinations (that’s 43 quintillion), but only one state matters: that magic moment when each of the cube’s six sides shows a single color. Many different strategies, or algorithms, exist for solving the cube. It took its inventor, Erno Rubik, an entire month to devise the first of these algorithms. A few years ago, it was shown that any scrambled cube can be solved in at most 26 moves when each quarter turn counts as one move.
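The 43-quintillion figure is not arbitrary. It falls out of standard counting of the positions reachable by legal turns: the corner and edge cubelets can be permuted and twisted or flipped, minus the constraints the cube’s mechanism imposes. A short Python check of that arithmetic (the function name is just for illustration):

```python
from math import factorial


def cube_states() -> int:
    corner_perms = factorial(8)   # the 8 corner cubelets can be arranged in any order
    corner_twists = 3 ** 7        # 7 corners twist freely; the 8th corner's twist is forced
    edge_perms = factorial(12)    # the 12 edge cubelets can be arranged in any order
    edge_flips = 2 ** 11          # 11 edges flip freely; the 12th edge's flip is forced
    # Corner and edge permutations must have matching parity, which halves the total
    return corner_perms * corner_twists * edge_perms * edge_flips // 2


print(f"{cube_states():,}")
```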

We’ve obviously acquired a lot of information about the Rubik’s Cube and how to solve it since the highly addictive puzzle first appeared in 1974, but the real trick in artificial intelligence research is to get machines to solve problems without the benefit of this historical knowledge. Reinforcement learning can help, but as noted, this strategy doesn’t work very well for the Rubik’s Cube. To overcome this limitation, a research team from the University of California, Irvine, developed a new AI technique known as Autodidactic Iteration.

“In order to solve the Rubik’s Cube using reinforcement learning, the algorithm will learn a policy,” write the researchers in their study. “The policy determines which move to take in any given state.”

To formulate this “policy,” DeepCube creates its own internalized system of rewards. With no outside help, and with the only input being changes to the cube itself, the system learns to evaluate the strength of its moves. But it does so in a rather ingenious, although labor-intensive, way. When the AI conjures up a move, it actually jumps all the way forward to the completed cube and works its way backward to the proposed move. This allows the system to evaluate the overall strength and proficiency of the move. Once it has acquired enough data about its current position, it uses a traditional tree search method, in which it examines each possible move to determine which one is the best, to solve the cube. It’s not the most elegant system in the world, but it works.
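To make the “work backward from the solved cube” idea concrete, here is a heavily simplified sketch in the spirit of Autodidactic Iteration. It is not the researchers’ code: a lookup table stands in for their neural network, a greedy one-move search stands in for their tree search, and the puzzle is a hypothetical four-tile toy where each move swaps two neighboring tiles. Training states are produced by scrambling the solved puzzle, and each state is scored by a one-step lookahead (reward of the next state plus its current estimated value), with +1 for reaching the goal and -1 otherwise.

```python
import random
from itertools import permutations

# Hypothetical toy puzzle: four tiles; move i swaps the tiles at positions i and i + 1.
SOLVED = (0, 1, 2, 3)
MOVES = (0, 1, 2)


def apply_move(state, i):
    tiles = list(state)
    tiles[i], tiles[i + 1] = tiles[i + 1], tiles[i]
    return tuple(tiles)


def reward(state):
    # +1 for reaching the goal, -1 for every other state
    return 1.0 if state == SOLVED else -1.0


def lookahead(state, values):
    # Score each move as reward(child) + estimated value(child); unseen states default to 0
    return [reward(c) + values.get(c, 0.0) for c in (apply_move(state, m) for m in MOVES)]


def train(iterations=2000, scrambles_per_iter=10, max_depth=8, seed=0):
    rng = random.Random(seed)
    values = {SOLVED: 0.0}  # a lookup table stands in for the neural network
    for _ in range(iterations):
        # Training states come from scrambling the solved puzzle with random moves
        batch = []
        for _ in range(scrambles_per_iter):
            state = SOLVED
            for _ in range(rng.randint(1, max_depth)):
                state = apply_move(state, rng.choice(MOVES))
            if state != SOLVED:
                batch.append(state)
        # Each sampled state's new value is its best one-step lookahead score
        for state in batch:
            values[state] = max(lookahead(state, values))
    return values


def solve(state, values, max_moves=20):
    # Greedy search (not the paper's tree search): always take the best-scoring move
    path = []
    while state != SOLVED and len(path) < max_moves:
        scores = lookahead(state, values)
        best = max(MOVES, key=lambda m: scores[m])
        state = apply_move(state, best)
        path.append(best)
    return state, path


if __name__ == "__main__":
    table = train()
    for start in permutations(range(4)):
        end, path = solve(start, table)
        print(start, "->", end, "in", len(path), "moves")
```

On a puzzle this small the table can hold every state. The point of using a neural network on the real cube is that 43 quintillion states cannot be tabulated, so the network has to generalize from the scrambles it has seen.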

The researchers, led by Stephen McAleer, Forest Agostinelli, and Alexander Shmakov, trained DeepCube using two million different iterations across eight billion cubes (including some repeats), and it trained for 44 hours on a 32-core Intel Xeon E5-2620 server with three NVIDIA Titan XP GPUs.

The system discovered “a notable amount of Rubik’s Cube knowledge during its training process,” write the researchers, including a strategy used by advanced speedcubers, namely a technique in which the corner and edge cubelets are matched together before they’re placed into their correct location. “Our algorithm is able to solve 100 percent of randomly scrambled cubes while achieving a median solve length of 30 moves—less than or equal to solvers that employ human domain knowledge,” write the authors. There’s room for improvement, as DeepCube had trouble with a small subset of cubes, which resulted in some solutions taking longer than expected.

Looking ahead, the researchers would like to test the new Autodidactic Iteration technique on harder, 16-sided cubes. More practically, this research could be used to solve real-world problems, such as predicting the 3D shape of proteins. Like the Rubik’s Cube, protein folding is a combinatorial optimization problem. But instead of figuring out the next place to move a cubelet, the system could figure out the proper placement of a sequence of amino acids along a 3D lattice.

Solving puzzles is all fine and well, but the ultimate goal is to have AI tackle some of the world’s most pressing problems, like drug discovery, DNA analysis, and building robots that can function in a human world.

[arXiv via MIT Technology Review]

Tech

via Gizmodo http://gizmodo.com

June 18, 2018 at 12:03PM

Nvidia Is Using AI to Perfectly Fake Slo-Mo Videos

https://ift.tt/2I46kR0

One of the hardest video effects to fake is slow motion. It requires software to stretch out a clip by creating hundreds of non-existent in-between frames, and the results are often stuttered and unconvincing. But taking advantage of the incredible image-processing potential of deep learning, Nvidia has come up with a way to fake flawless slow motion footage from a standard video clip. It’s a good thing The Slo-Mo Guys both have day jobs to fall back on.

Slowing a video clip from 30 frames per second to 240 frames per second requires the creation of 210 additional frames for every second of footage, or seven in-betweens for every frame originally captured. Simply blending or morphing the frames before and after to create the new interstitial frames just isn’t enough to keep the motion as buttery smooth as real slow-mo footage appears. This is why slow motion in sports always looks far less cinematic than it does in the movies.
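The frame arithmetic, and the simple blending approach that falls short, can be sketched in a few lines of Python. This is only the naive cross-fade baseline, not Nvidia’s neural network; frames are modeled as hypothetical flat lists of pixel intensities, and the function names are illustrative.

```python
def slowmo_plan(src_fps: int, dst_fps: int):
    """Return (in-betweens per original frame, frames added per second)."""
    if dst_fps % src_fps:
        raise ValueError("target rate must be a whole multiple of the source rate")
    per_gap = dst_fps // src_fps - 1
    added_per_second = dst_fps - src_fps
    return per_gap, added_per_second


def naive_blend(frame_a, frame_b, per_gap):
    """Cross-fade baseline: each in-between is a weighted average of its neighbors."""
    out = []
    for k in range(1, per_gap + 1):
        t = k / (per_gap + 1)  # fractional position between frame_a and frame_b
        out.append([(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)])
    return out


print(slowmo_plan(30, 240))
```

Averaging pixels like this makes a moving object fade out of one spot and into the next instead of actually travelling between them, which is why blended slow motion looks ghosted and stuttery.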

Plugins for high-end visual effects applications, like RE:Vision Effects’ Twixtor, are able to improve the results of faked slow motion, but they require complex analysis of the motion in the clip, and often take hours to render. Nvidia has taken a different approach, and based on the results in the sample footage it’s released, an even better one.

Nvidia’s deep-learning AI was trained on over 11,000 reference videos of slo-mo sports footage filmed natively at 240 frames per second. Based on the preceding and following frames, the neural network was able to predict how the 210 missing in-between frames in each second of footage were supposed to look.

Smartphones and even high-end digital cameras are already able to capture slow-motion footage at these speeds, but as the frame rate increases, the resolution drops because of the sheer amount of data being created on the fly. Nvidia’s AI approach is a much cheaper alternative to dropping tens of thousands of dollars on a high-speed camera from the likes of Phantom because it all happens after the video is recorded. The results aren’t as instant as with a high-speed camera: even with Nvidia’s high-end graphics processors powering the AI, it still needs time to process. But as smartphones become more powerful, eventually you’ll be able to fake stunning 8K slo-mo footage with a simple button click.
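To put the bandwidth problem in rough numbers, the sketch below computes the raw, uncompressed data rate of 1080p video. It assumes 3 bytes per pixel (8-bit RGB); real cameras compress their footage and use other pixel formats, so these are illustrative upper bounds rather than actual sensor figures.

```python
def raw_data_rate(width: int, height: int, fps: int, bytes_per_pixel: int = 3) -> int:
    """Uncompressed bytes per second for video of the given size and frame rate."""
    return width * height * bytes_per_pixel * fps


for fps in (30, 240):
    rate = raw_data_rate(1920, 1080, fps)
    print(f"1080p at {fps} fps: {rate / 1e9:.2f} GB/s")
```

Going from 30 to 240 frames per second multiplies the data rate by eight at the same resolution, which is why phones trade resolution away when shooting at high frame rates.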

[Nvidia via Prosthetic Knowledge]

Tech

via Gizmodo http://gizmodo.com

June 18, 2018 at 02:03PM

Our First Look at What Feels Like the First Star Trek Action Figures in Ages

https://ift.tt/2M3ukX2

There’s plenty of Trek merchandise out there, from giant ship replicas, to fancy dolls, to actual meme statuettes. But for honest-to-god action figures, it feels like it’s been years since there’s been a line of Starfleet’s finest small enough to command your valuable desk space. That’s finally changing thanks to McFarlane Toys.

It’s been about a year since McFarlane announced it had acquired the rights to make new Star Trek figures, including characters from the then-upcoming CBS All Access series Discovery. After the company showed up to events like Toy Fair with little more than empty boxes, we’ve finally got our first look at the two figures that will launch the company’s new Trek line of 7″ posable toys: fan favorite Captains James T. Kirk and Jean-Luc Picard.

Kirk and Picard both feature a range of articulation and accessories that allow you to pose them boldly going, one stiff plastic step at a time, and feature pretty decent likenesses of William Shatner and Patrick Stewart as they appeared on their respective series (although the Stewart sculpt looks much more like him from the side rather than head-on).

Kirk comes with a classic-style communicator, the type II phaser pistol, as well as the classic, wonderfully goofy-looking phaser rifle. Meanwhile, Picard gets a little less, thanks to Starfleet’s comms badge solving the problem of handheld communicators between Star Trek and The Next Generation—he simply gets the more modern evolution of the phaser pistol, as well as the Ressikan flute Picard acquired during the events of the beloved episode “The Inner Light.”

As a bonus, the way Picard’s phaser-holding hand is posed makes it double as a good “make it so” ordering-hand:

What good’s a Star Trek action figure if it can’t look like it’s giving orders?

The line is expected to continue with a figure based on Discovery’s Michael Burnham, but first, Picard and Kirk will hit shelves this summer for around $20 each. How long until we get the dream figure we’re all waiting for, though: Captain Sisko with Avery Brooks laugh sound FX? Because I’d buy both a bald variant and a seasons one-through-three hair variant of that.

[Toyark]

Tech

via Gizmodo http://gizmodo.com

June 18, 2018 at 10:57PM

This $20 Car Charger Changes Colors, Remembers Where You Parked, and Monitors Your Car Battery

https://ift.tt/2tez6sS

Just when you thought you had car chargers pegged, Anker went out and made the smartest one you’ve ever seen.

Similar to the Nonda Zus, the Roav by Anker SmartCharge Spectrum connects to your phone over Bluetooth while you drive. When you turn the car off and the Bluetooth connection breaks, the Roav app will mark down your parking location on a map, so you can find your way back.

Perhaps more usefully, every time you start your car, the SmartCharge will also log the health of your car battery, so you can track its charge over time from your phone, and get a replacement ready before you get stranded in your own driveway.

Those features alone (along with Quick Charge 3.0 charging) would make this worth $20, but it does have one last trick up its sleeve: A customizable accent light. The LED ring around the USB ports can display 16,000 different colors, and you can choose your favorite from the app to make it perfectly match (or stand out from) your car’s own dashboard lighting. Pretty nifty.

Today’s price is about $4 less than usual, no promo code required.

Tech

via Gizmodo http://gizmodo.com

June 19, 2018 at 06:51AM

Adobe Squeezed the Best Parts of Its Video Editing Suite into a New Mobile App

https://ift.tt/2M0cP9Z

Adobe has embraced mobile photo editing with open arms, releasing robust apps like Lightroom for processing and perfecting your images on a phone. It hasn’t been quite as committed when it comes to mobile support for video production, which is why it’s nice to see its four-year-old Premiere Clip app being eclipsed by the new Project Rush, which merges parts of Premiere, After Effects, and Audition into an all-in-one video app that lets producers master videos right from a mobile device.

If you’re unfamiliar with Adobe’s desktop video production tools, Premiere Pro is its video editor, After Effects is a visual effects and motion graphics tool, and Audition is a multi-track editor for audio. Together they cover all the angles of video post-production, but to date they have been available only for Macs and PCs.

Back in 2014, Adobe released a streamlined version of Premiere for Android and iOS devices called Premiere Clip, but Project Rush is going to vastly expand what content creators will be able to produce from their mobile devices. However, Project Rush isn’t mobile-only. A desktop version will be available as well, and using Adobe’s cloud capabilities, projects will be automatically synced across devices, allowing users to begin editing on their phone, but then finish a project on their laptop.

Project Rush isn’t going to replace Premiere, After Effects, or Audition altogether. So that its UI can work the same way on computers and mobile devices, Project Rush’s capabilities and interface have been streamlined to accommodate smaller touchscreens. Power users of Adobe’s post-production tools will undoubtedly find a lot of functionality missing in Project Rush, but at the same time, it should boost what users can create solely from a handheld device.

Video and audio editing apps have been available for phones and tablets for a while now, so Adobe isn’t breaking new ground there. But complex visual effects software like After Effects has not been available on those devices.

During a brief demo of Project Rush, it didn’t appear as if the new app had borrowed AE’s powerful compositing and masking capabilities, but advanced color correction tools were there, as were the motion graphic titling templates that are already available in Premiere Pro. Creating custom animated titles didn’t appear to be an option either, although I’m not sure I’d even want to attempt that on a six-inch screen.

When it comes to audio, Project Rush hands the mastering off to Adobe Sensei, the company’s AI-powered automation software. Instead of users having to process every bit of audio by hand, Sensei automatically mixes and masters the sound levels on their behalf, an approach we’re seeing creep into more and more of Adobe’s products.
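Adobe hasn’t detailed how its automatic mastering works. As a far simpler stand-in for the general idea of automatic level setting, the sketch below applies plain RMS normalization: it scales a clip so its average level hits a target, then clips any samples that would exceed full scale. The function names and the 0.1 default target are hypothetical, and real mastering tools rely on perceptual loudness measures and limiters rather than anything this crude.

```python
import math


def rms(samples):
    """Root-mean-square level of a clip whose samples lie in [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def normalize_rms(samples, target_rms=0.1):
    """Scale a clip so its RMS level equals target_rms, clipping to [-1.0, 1.0]."""
    level = rms(samples)
    if level == 0:
        return list(samples)  # silence: nothing to scale
    gain = target_rms / level
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


print(normalize_rms([0.5, -0.5], target_rms=0.25))
```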

Rounding out Project Rush’s focus on video-production-on-the-go is the ability to publish or share video content from the app on multiple platforms, including YouTube, Facebook, and Snapchat. This includes the ability to specify custom thumbnails, the title and description of the video, and even scheduling if you find yourself finishing in the middle of the night and don’t want your masterpiece to hit the internet until you wake up in the morning.

Adobe doesn’t have a specific timeline for when Project Rush will be available for download; “later this year” is the only timeline it will commit to. But it will be showing more of the app at the VidCon 2018 conference in Anaheim this week.

[Adobe]

Tech

via Gizmodo http://gizmodo.com

June 19, 2018 at 08:03AM

This Plug Senses Outlets and Glows So You Never Have to Shock Yourself Again

https://ift.tt/2JQOUNJ

Blindly reaching under your desk to find an available outlet to plug in your dying laptop is never a safe idea. I’ve had it go wrong… it was not pleasant. Reaching for a flashlight is a safer approach, but Ten One Design’s new Stella plug is an even better one. It’s got its own built-in flashlight that only turns on when electricity is detected nearby, illuminating the outlet and reducing the risk of getting shocked.

It sounds like sorcery, but electricians already use tools with similar technology so they can quickly assess whether a wire is carrying power without having to connect a multimeter. Ten One Design has not only improved the sensitivity of the non-contact voltage sensor it has built into Stella, but has also minimized the power consumption of the built-in LED flashlight so that it should run for well over a decade on its integrated battery. You’ll have long replaced your laptop by the time it stops working.

The $35 Stella will be available in two versions for Mac and PC laptops, but the Mac version includes an additional upgrade to the hardware that Apple ships. Ten One Design has included a slide-out clip so that when the Stella is connected to your MacBook’s power brick, you have a better way to wind and secure its braided power cord. It’s a small improvement, but one that MacBook users might actually be even more excited about than Stella’s automatic flashlight.

Tech

via Gizmodo http://gizmodo.com

June 19, 2018 at 08:03AM