Tim Sweeney wants Unreal to power the cross-platform revolution

http://ift.tt/2puLvXG

It’s 2018 and developers are finally taking mobile games seriously — or it’s the other way around, depending on whom you ask.

Epic CTO Kim Libreri says, "I think it’s almost the other way, I think it’s that mobile developers are taking games more seriously."

Either way, the mobile game market has shifted drastically over the past few years, and today major developers are building massive experiences for tiny screens, often putting fully fledged PC and console titles directly on handheld devices. Think Fortnite, Ark: Survival Evolved, PlayerUnknown’s Battlegrounds and Rocket League. All of these games, and countless others, run on Unreal, Epic’s engine (and Fortnite is Epic’s in-house baby, of course).

Running on Unreal means these games can play across all platforms with relative ease — the same code is powering the PlayStation 4, Xbox One, Nintendo Switch, PC and mobile editions of each game. It’s the same title across all platforms.

That means there’s no reason, say, Xbox One and PlayStation 4 players can’t link up and jump into games together. Well — there’s no technical reason. Sony has long been a holdout in this space, refusing to allow cross-console play. Both Microsoft and Nintendo are open to the idea, while the PC and mobile markets have been primed for years.

Sony’s stance isn’t going to make sense from a business perspective, either, Sweeney argues.

"We’re one link away from having it all connected."

Epic is supporting the cross-platform trend with Unreal Engine. The latest version will make it easier for developers to bring their console or PC games to mobile devices, using Fortnite as a successful case study. Another improvement heading to Unreal 4.20, which lands for developers this summer, is a new live record and replay feature. This allows players to cinematically view and edit their gameplay after the match is done — not only allowing serious players to study their strategies, but also empowering YouTubers and Twitch streamers to create movie-like highlight reels.

Coming soon

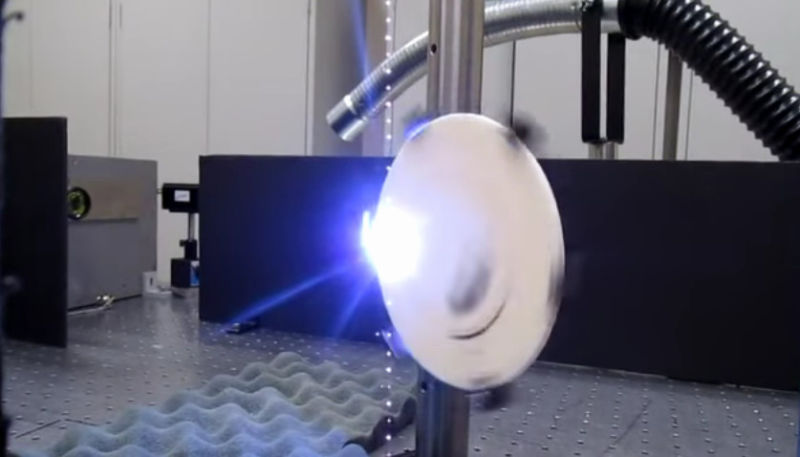

Looking to the future, Epic is working on fine-tuning high-end graphics capabilities and motion-capture animation processes, the kinds of things that major AAA developers might use. Epic partnered with NVIDIA and Microsoft on new ray-tracing technology, and at GDC it showed off a demo set in the Star Wars universe that featured the technique running in real time. The quality was stunning, but this kind of tech isn’t quite ready for everyday consumers.

As Libreri explains it, "It’s running on a quite powerful piece of hardware right now because experimental technology runs on a –"

"It’s one PC with four GPUs," Sweeney chimes in.

"Four GPUs, yeah. Nvidia DGX-1 with four GPUs."

That’s certainly not what most folks have at home, but the tech should catch up to accessible gaming hardware in the near future.

In other news of a visually striking nature, Epic also developed a real-time motion-capture animation system in partnership with 3Lateral. Using the company’s Meta Human Framework volumetric capture, reconstruction and compression technology, Epic was able to digitize a performance by actor Andy Serkis in a shockingly lifelike manner, in real time and without any manual animation. The technology also allowed Epic to seamlessly transfer Serkis’ performance (a Macbeth monologue) onto the face of a 3D alien creature.

Partnering with 3Lateral, Cubic Motion, Tencent and Vicon, Epic also showed off Siren, a digital character rendered in real time based on a live actress’ performance.

"[3Lateral] is the company that actually builds the digital faces that then we work out how to make them look realistic in the engine," Libreri says. "What they’re able to do is what they call four-dimensional capturing, which is like a scan but it’s a scan that moves over time. Because of that, they’re able to refine the facial animation systems for the digital human to get all the nuances of every wrinkle, how every piece of flesh moves."

via Engadget http://www.engadget.com

March 21, 2018 at 12:24PM

![Unreal Engine + $60,000 GPU = Amazing, real-time raytraced Star Wars [Updated] | Ars Technica](https://cdn.arstechnica.net/wp-content/uploads/2018/03/Reflections_03_2560x1400-760x380.png)